SQL Server

https://www.mssqltips.com/sqlservertip/3307/managing-the-size-of-the-sql-server-ssis-catalog-database/

Scenario

I have implemented the SSIS catalog that comes with SQL Server 2012, and I love the reporting and centralization that come with it. However, after a few months I noticed that the database was growing rapidly. How can I keep the size of this database to a minimum?

Configuration

The SSIS catalog is the central point for working with Integration Services (SSIS) projects that you’ve deployed to the SSIS server. When the SSIS catalog is implemented, the SSISDB database is created automatically. This tip focuses on the growth of SSISDB rather than on the SSIS catalog itself.

SSIS projects, packages, parameters, environments, and operational history are all stored in the SSISDB database. If you have hundreds of SSIS packages, or packages that run every few minutes, the accumulated execution history can make the database grow very quickly.
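To see where the space is actually going, one option is to list the largest tables in the catalog database. The query below is a minimal sketch that uses only standard system views (`sys.dm_db_partition_stats`, `sys.tables`, `sys.schemas`). It assumes the catalog database has its default name, SSISDB, and that you have permission to view database state.

```sql
USE SSISDB;
GO

-- Overall database size (data + log), as reported by the engine.
EXEC sp_spaceused;
GO

-- Ten largest tables by reserved space.
-- reserved_page_count is counted in 8 KB pages, so pages * 8 / 1024 gives MB.
SELECT TOP (10)
       s.name + N'.' + t.name AS table_name,
       -- Count rows from the heap or clustered index only, to avoid double counting.
       SUM(CASE WHEN ps.index_id IN (0, 1) THEN ps.row_count ELSE 0 END) AS row_count,
       CAST(SUM(ps.reserved_page_count) * 8 / 1024.0 AS decimal(18, 2)) AS reserved_mb
FROM sys.dm_db_partition_stats AS ps
JOIN sys.tables  AS t ON t.object_id = ps.object_id
JOIN sys.schemas AS s ON s.schema_id = t.schema_id
GROUP BY s.name, t.name
ORDER BY reserved_mb DESC;
```

On a busy catalog, the operational history tables in the `internal` schema (for example `internal.event_messages` and `internal.operation_messages`) are often the ones at the top of this list.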

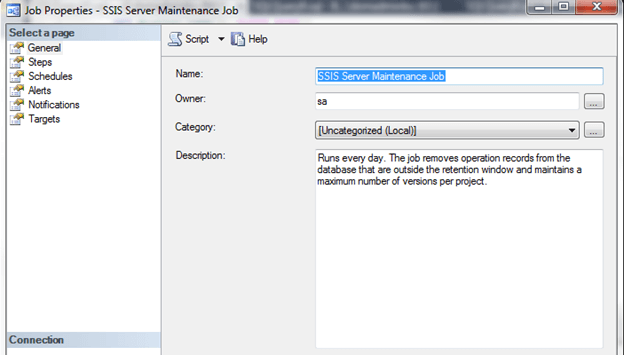

Enabling this feature also creates a SQL Server Agent job named SSIS Server Maintenance Job:

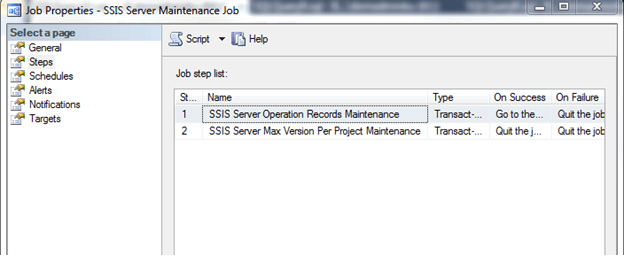

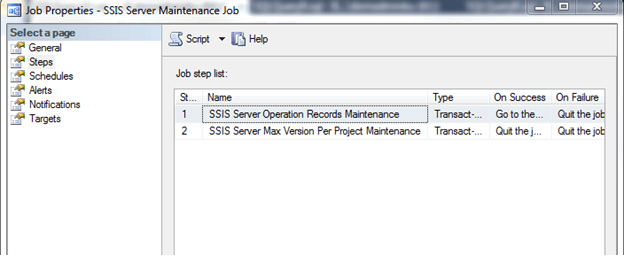

Inside this job are two steps, SSIS Server Operation Records Maintenance and SSIS Server Max Version Per Project Maintenance, that help clean up the database. By default, the job runs nightly at 12:00 AM, which is sufficient.
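If you prefer to confirm the job's steps and schedule with a query rather than through the SQL Server Agent UI, the standard `msdb` job tables can be read directly. This is a sketch that assumes the job still has its default name, SSIS Server Maintenance Job; adjust the filter if it was renamed.

```sql
USE msdb;
GO

-- List the steps of the maintenance job and the command each one runs.
-- Assumption: the job kept its default name.
SELECT j.name     AS job_name,
       j.enabled  AS job_enabled,
       st.step_id,
       st.step_name,
       st.database_name,
       st.command
FROM dbo.sysjobs     AS j
JOIN dbo.sysjobsteps AS st ON st.job_id = j.job_id
WHERE j.name = N'SSIS Server Maintenance Job'
ORDER BY st.step_id;

-- Show the schedule attached to the job.
-- freq_type = 4 means daily; active_start_time is an integer in HHMMSS form,
-- so 0 corresponds to 12:00 AM.
SELECT s.name AS schedule_name,
       s.enabled,
       s.freq_type,
       s.active_start_time
FROM dbo.sysjobs         AS j
JOIN dbo.sysjobschedules AS js ON js.job_id = j.job_id
JOIN dbo.sysschedules    AS s  ON s.schedule_id = js.schedule_id
WHERE j.name = N'SSIS Server Maintenance Job';
```

The `command` column of the first result set shows the T-SQL each step executes, which is a quick way to check what the two maintenance steps call.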

Looking at the first step, SSIS Server Operation Records Maintenance, you will notice that it executes a stored procedure named internal.cleanup_server_retention_window. This sounds like it could be the stored procedure that cleans up history.
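One way to check that guess is to read the procedure's source and the catalog settings it is likely to depend on. The sketch below assumes the default SSISDB database name and that your login is allowed to view object definitions there. It only reads metadata and does not delete anything.

```sql
USE SSISDB;
GO

-- Return the source text of the cleanup procedure so you can see what it deletes.
-- The result is NULL if the object does not exist or you lack permission
-- to view its definition.
SELECT OBJECT_DEFINITION(
           OBJECT_ID(N'internal.cleanup_server_retention_window')
       ) AS procedure_source;

-- Catalog-level settings. The retention-related rows (for example
-- RETENTION_WINDOW and OPERATION_CLEANUP_ENABLED) indicate how much
-- operational history is kept and whether cleanup is turned on.
SELECT property_name,
       property_value
FROM catalog.catalog_properties
ORDER BY property_name;
```

Comparing the retention values with how often your packages run gives a rough idea of how much history the nightly job leaves behind.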
